CVPR 2024 Embodied AI Workshop PRS Challenge: Human-centered In-building Embodied Delivery

by Peking University & Apple & PRGS

What is the Polar Research General Services Organization?

Polar Research General Services (PRGS) is a start-up organization that provides academic institutions and companies with services for constructing virtual environments and generating synthetic data. Its services include, but are not limited to: embodied virtual environment construction, human-robot interaction data synthesis, business scenario solutions (feasibility assessments, decision support, and robot prototype manufacturing), generative digital twins, metaverse gaming solutions, and film industry simulation solutions.

What is the Polar Research Station Platform?

The Polar Research Station (PRS) Platform is a simulator project provided by the PRGS Organization. We aim to address the critical challenge of gradually integrating generalist embodied agents into human society. To explore human-robot integration scenarios and facilitate Embodied AI research, the PRS Platform can simulate (build) small-scale human communities of various types, producing different kinds of data, ranging from skill tasks and multimodal perception data to critical embodied human-robot interaction data. The CVPR 2024 Embodied AI Workshop PRS Challenge uses a customized version of the PRS platform environment. Currently, the PRS platform supports the following features:

- A continuous, interactive 3D environment powered by a physics engine. We support several popular physics engines (e.g., PyBullet, MuJoCo, PhysX 5.0) for virtual environment construction.

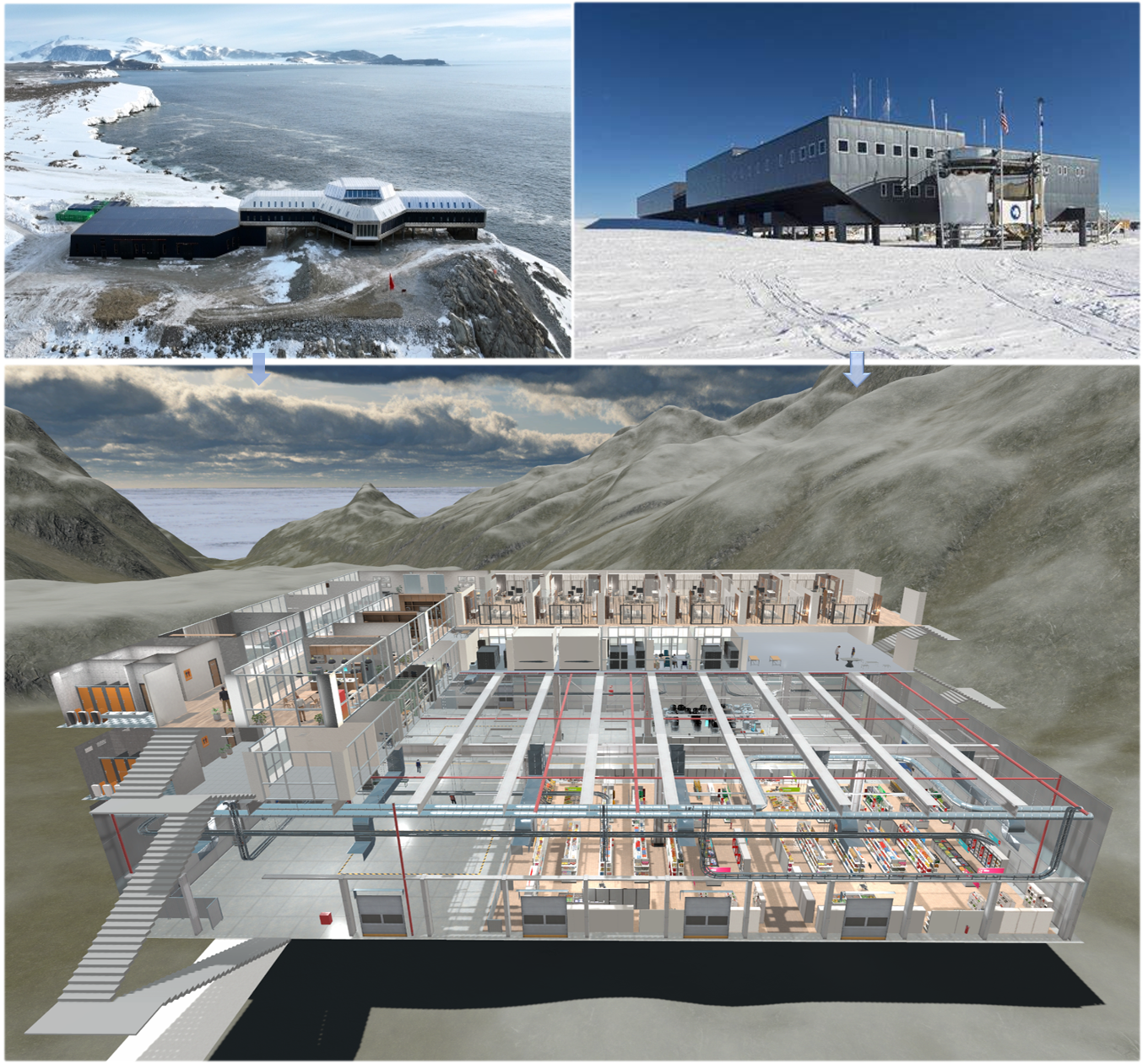

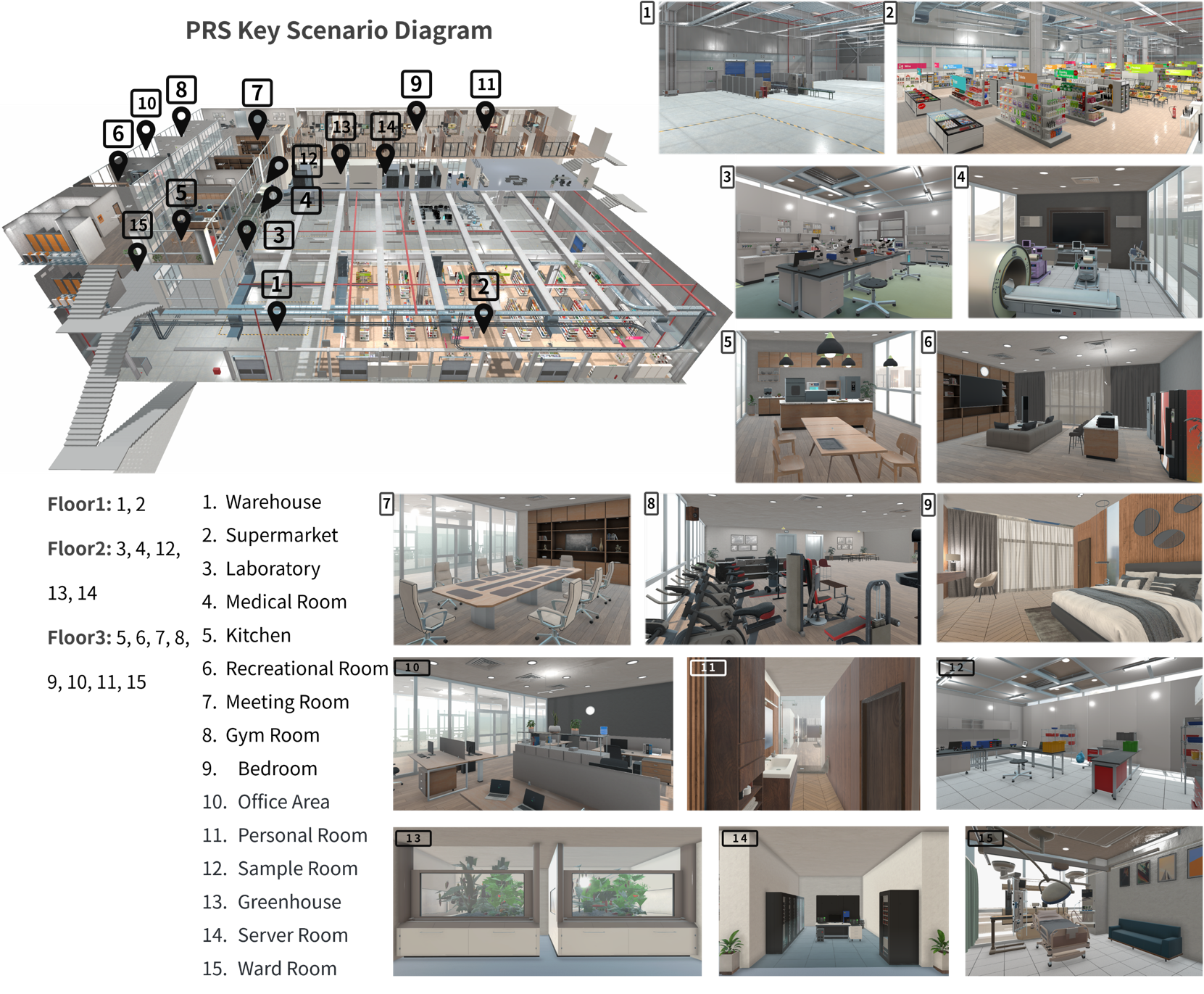

- Our framework can flexibly construct various styles of buildings and room layouts. For instance, the multi-story building (Figure 1) used in the challenge contains activity rooms, meeting rooms, kitchens, bedrooms, laboratories, greenhouses, warehouses, supermarkets, gyms, and other common scenes, as shown in Figure 2.

- Human characters, driven by generative agent technology, with their own meaningful behaviors and human-like needs (basic physiological, work, entertainment, social, etc.). For example, human characters will seek out their favorite food when hungry.

- Characters autonomously move through the building according to their needs, preferences, habits, states, and social relationships, as shown in Figure 3. We designed an algorithm so that character behaviors closely resemble those of real individuals; this approach is more effective than relying solely on LLMs and digital twin technology. We are committed to making virtual human characters increasingly similar to real humans.

- Characters have their own emotion and facial-expression system, which is driven mainly by what happens around them.

- Characters accomplish their goals by interacting with a large number of objects (Figure 5) and diverse interactive devices within the environment.

- The human community environment is designed to run stably over long periods, continuously generating human-robot interaction data for various scenarios and preconditions. This makes it suitable for thoroughly testing the reliability of embodied algorithms.

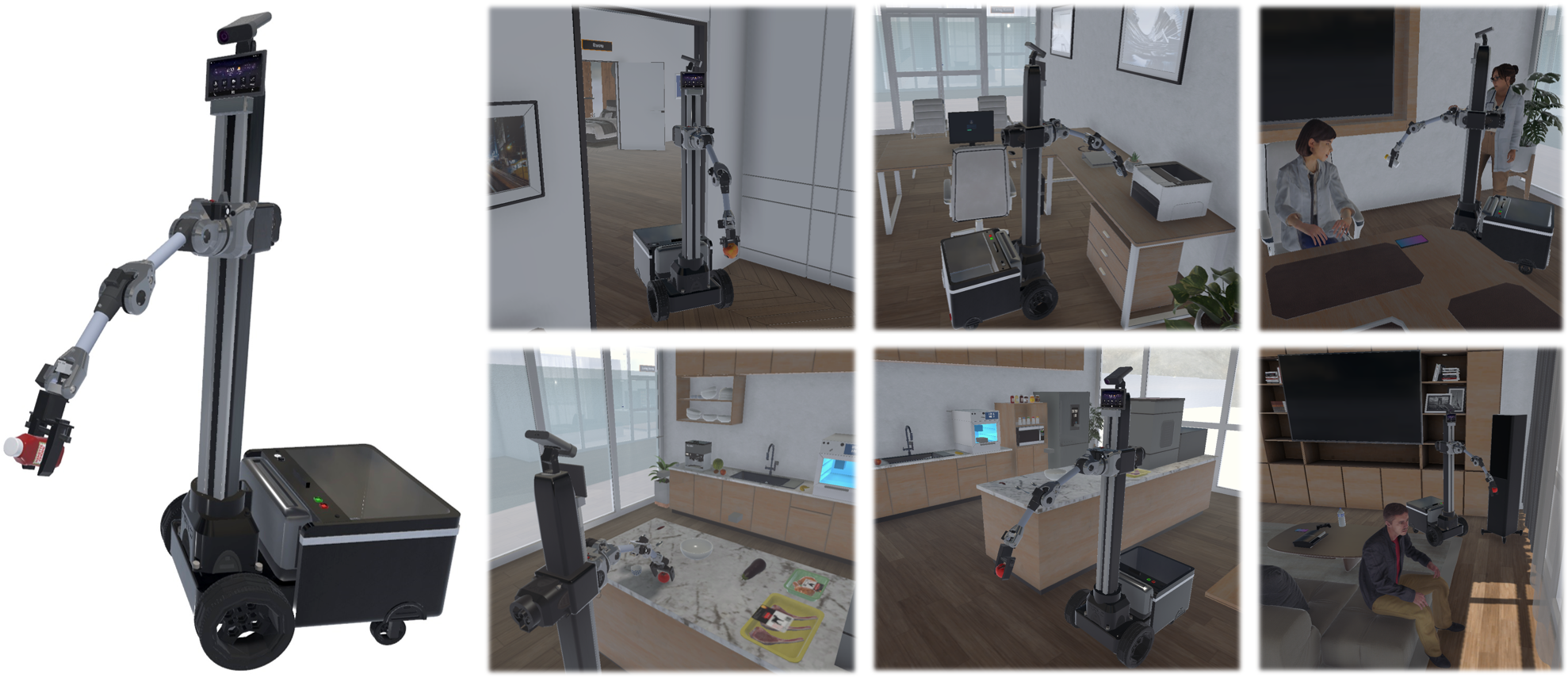

- In the simulator, robots can obtain perception signals from the virtual environment through visual, tactile, auditory, temperature, humidity, and olfactory sensors. Robots can also interact with their surroundings physically through movement, grasping, and manipulation, as shown in Figure 6.

- To make the platform easy to use for researchers from different fields and backgrounds (NLP, Computer Vision, Robotics, Reinforcement Learning, etc.), we provide APIs in several styles, ranging from ROS-style motion control to ALFRED-style semantic-level actions. If you find any part of the platform inconvenient to use, please contact us.

- Our lightweight, flexible framework makes the environment user-friendly and easy to extend, modify, and develop using only Python!

Figure 1: The PRS platform supports complex multi-story architectural structures interconnected by stairs and elevators. The building contains many rooms with diverse functions and styles, forming a virtual human living environment for exploring human-robot integration. The building's design is inspired by real-world polar research stations (e.g., Polar Research Institute of China, Amundsen-Scott South Pole Station).

Figure 2: Views of each room in the current environment, in the following order: warehouse, supermarket, sample room, medical room, kitchen, living room, meeting room, gym, bedroom, working space, office, laboratory, greenhouse, server room, etc.

Common scenes, together with their corresponding objects and functional devices, are integrated into the PRS Environment.

Figure 3: Virtual characters live in the building and behave like humans. Human behavior deserves particular emphasis in commercial scenarios, because a robot's activities ultimately revolve around human activities. Our virtual environment therefore supports a human character system that controls goals, actions, and interactions. In this task, character actions mainly take the form of daily activities (working, resting, simple socializing, etc.). Since the delivery task depends closely on where characters are, their movement within the building is primarily driven by a generative system.

Figure 4: Each NPC, driven by a generative model, is a human-like individual with its own needs and corresponding behaviors.
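The needs-driven behavior described above (for example, a hungry character seeking its favorite food) can be illustrated with a toy model. This sketch is not the PRS character system, whose algorithm is not described here; it simply assumes that each need gains urgency at a fixed rate and that the most urgent need above a threshold selects the next goal. The `Character` class, the `GOALS` table, and all rates are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical goal for each need; a real system would choose rooms and objects dynamically.
GOALS = {
    "hunger": "go to the kitchen and eat {food}",
    "work": "go to the working space",
    "social": "go to the activity room and chat",
}


@dataclass
class Character:
    """Toy needs-driven NPC: needs grow over time and the most urgent one sets the goal."""
    name: str
    favorite_food: str
    needs: Dict[str, float] = field(
        default_factory=lambda: {"hunger": 0.0, "work": 0.0, "social": 0.0})
    # Urgency added per simulated hour (illustrative values, not PRS parameters).
    rates: Dict[str, float] = field(
        default_factory=lambda: {"hunger": 0.10, "work": 0.05, "social": 0.03})
    threshold: float = 0.5  # a need must reach this level before it drives behavior

    def tick(self, hours: float = 1.0) -> None:
        """Advance simulated time; each need grows at its own rate, capped at 1.0."""
        for need, rate in self.rates.items():
            self.needs[need] = min(1.0, self.needs[need] + rate * hours)

    def choose_goal(self) -> Optional[Tuple[str, str]]:
        """Return (need, goal) for the most urgent need, or None if no need is pressing."""
        need, level = max(self.needs.items(), key=lambda kv: kv[1])
        if level < self.threshold:
            return None
        return need, GOALS[need].format(food=self.favorite_food)

    def satisfy(self, need: str) -> None:
        """Reset a need once the corresponding activity has been completed."""
        self.needs[need] = 0.0
```

For example, `Character("Alice", "noodles")` has no pressing need after one simulated hour, but after several more hours hunger crosses the threshold first and `choose_goal()` returns `("hunger", "go to the kitchen and eat noodles")`.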

Figure 5: Diverse items and furniture in the PRS Environment. At its core, our virtual environment is driven by a physics engine, and its items have physical properties, so almost all motion is continuous (the exceptions are specific object state changes controlled by scripts and interfaces).

Figure 6: The embodied robot can move anywhere and manipulate objects with its arm. It is equipped with visual perception (RGB-D) and simple tactile perception based on rigid-body collision. We provide several ways to control it: users can drive the robot either through an ALFRED-style interface (typically invoked with high-level actions, e.g., by LMMs combined with object segmentation) or through a ROS-like API.
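To make the difference between the two control styles concrete, here is a hypothetical sketch in which one ALFRED-style semantic action (`pick_up`) expands into a sequence of ROS-style low-level commands. None of these class or method names come from the PRS API; the controller only logs commands instead of moving a robot, and the object positions stand in for what segmentation plus depth perception might provide.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class LowLevelController:
    """Hypothetical ROS-style layer: explicit motion commands, logged instead of executed."""
    base: Tuple[float, float] = (0.0, 0.0)
    log: List[str] = field(default_factory=list)

    def move_base_to(self, x: float, y: float) -> None:
        self.base = (x, y)
        self.log.append(f"move_base_to({x:.2f}, {y:.2f})")

    def move_arm_to(self, p: Vec3) -> None:
        self.log.append(f"move_arm_to({p[0]:.2f}, {p[1]:.2f}, {p[2]:.2f})")

    def set_gripper(self, closed: bool) -> None:
        self.log.append("close_gripper()" if closed else "open_gripper()")


@dataclass
class SemanticAPI:
    """Hypothetical ALFRED-style layer: one semantic action expands into low-level commands."""
    ctrl: LowLevelController
    objects: Dict[str, Vec3]  # object name -> position, e.g. from segmentation + depth

    def pick_up(self, name: str, standoff: float = 0.5) -> None:
        if name not in self.objects:
            raise KeyError(f"unknown object: {name}")
        x, y, z = self.objects[name]
        self.ctrl.move_base_to(x - standoff, y)   # approach, stopping short of the object
        self.ctrl.set_gripper(closed=False)
        self.ctrl.move_arm_to((x, y, z + 0.10))   # pre-grasp pose above the object
        self.ctrl.move_arm_to((x, y, z))          # descend to the object
        self.ctrl.set_gripper(closed=True)
        self.ctrl.move_arm_to((x, y, z + 0.10))   # lift
```

With `SemanticAPI(LowLevelController(), {"apple": (2.0, 1.0, 0.8)})`, calling `pick_up("apple")` logs six commands, starting with `move_base_to(1.50, 1.00)`. Keeping the semantic layer as a thin wrapper over the motion layer is one way to let LMM-driven agents and robotics code share the same robot.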

Figure 7: Robots interact through articulated joints, changing the positions of objects or the states of articulated devices. A variety of robots (e.g., Panda, Unitree B1, H1) can also interact within the environment.
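The distinction drawn in Figures 5 and 7 between continuous physical motion and discrete device state can be sketched with a hypothetical drawer on a prismatic joint. This is an illustrative assumption, not PRS code: each physics step integrates a joint velocity within the joint limits, the open/closed state is derived from the joint position, and `scripted_toggle` mimics the script-controlled state changes mentioned in Figure 5 by jumping directly to a limit. The class name, limits, and threshold are made up.

```python
from dataclasses import dataclass


@dataclass
class PrismaticDrawer:
    """Hypothetical articulated device whose discrete state is derived from a joint position."""
    position: float = 0.0        # joint position in metres
    lower: float = 0.0           # joint limits
    upper: float = 0.40
    open_threshold: float = 0.05

    def apply_velocity(self, velocity: float, dt: float) -> None:
        """Continuous motion: integrate a joint velocity for one physics step, clamped to limits."""
        self.position = min(self.upper, max(self.lower, self.position + velocity * dt))

    @property
    def state(self) -> str:
        """Discrete state read off the continuous joint position."""
        return "open" if self.position > self.open_threshold else "closed"

    def scripted_toggle(self) -> None:
        """Script-driven state change: jump straight to the opposite joint limit."""
        self.position = self.lower if self.state == "open" else self.upper
```

Pulling the drawer at 0.2 m/s with 0.02 s steps leaves it "closed" for the first ten steps and "open" after thirty; further pulling stops at the 0.40 m limit.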

--- Future Work ---

Further features, such as social expressions, more interactive devices, and additional environmental features (e.g., mirrors, as shown in Figure 8), will be announced.

(1) Projector simulation. (2) Interactive devices for various purposes. (3) Monitoring and display screen simulations. (4) Mirrors that can reflect visual content.

Figure 8: NPC-device interaction and mirror effect.

We can customize the generation of the following according to requirements:

1. High-precision physical simulation and various interactive objects (devices; see Figure 9).

2. Importing URDF files into our scenes to simulate robots' physical interactions.

Figure 9: We have established a set of processes for customizing interactive devices with physical capabilities.

3. Customizable rendering pipeline selection, as shown in Figure 10.

4. Rapid customization of functional buildings to meet the needs of large-scale embodied commercial applications.

Figure 10: Customizable rendering pipeline to meet varying levels of demand.
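For item 2, the PRS import pipeline itself is not documented here, so the sketch below only shows what a URDF file gives an importer to work with. Using Python's standard `xml.etree.ElementTree`, it extracts the links, each joint's type, parent/child links and limits, and the root link of the kinematic tree. The sample robot `toy_arm` and the function `summarize_urdf` are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict

# A tiny hand-written URDF (two links, one revolute joint) used only for illustration.
SAMPLE_URDF = """
<robot name="toy_arm">
  <link name="base_link"/>
  <link name="upper_arm"/>
  <joint name="shoulder" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <origin xyz="0 0 0.1" rpy="0 0 0"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1.0"/>
  </joint>
</robot>
"""


def summarize_urdf(urdf_xml: str) -> Dict[str, Any]:
    """Extract the kinematic structure an importer needs: links, joints, and root link(s)."""
    root = ET.fromstring(urdf_xml.strip())
    links = [link.get("name") for link in root.findall("link")]
    joints = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        joints.append({
            "name": joint.get("name"),
            "type": joint.get("type"),
            "parent": joint.find("parent").get("link"),
            "child": joint.find("child").get("link"),
            # <limit> is optional in URDF (e.g. fixed joints omit it); lower/upper default to 0.
            "limits": (float(limit.get("lower", "0")), float(limit.get("upper", "0")))
                      if limit is not None else None,
        })
    # The root of the kinematic tree is any link that is never a joint's child.
    children = {j["child"] for j in joints}
    root_links = [name for name in links if name not in children]
    return {"robot": root.get("name"), "links": links, "joints": joints,
            "root_links": root_links}
```

Calling `summarize_urdf(SAMPLE_URDF)` reports one revolute joint `shoulder` connecting `base_link` to `upper_arm` with limits of ±1.57 rad, and identifies `base_link` as the root, which is where an importer would attach the robot to the scene.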

To Everyone:

Until the full version is released, two versions are available: the PRS Challenge version and the Trial Version.

(1) First and foremost, we are committed to permanently maintaining our platform and continuously integrating novel and engaging features.

(2) If you have an excellent idea, please do not hesitate to contact us. We are keen to collaborate with thoughtful institutions, individuals, and companies.

(3) If you find our work beneficial, please let us know. If you would like to support us, please get in touch with us directly.

(4) Finally, we sincerely hope to explore the future of generalist intelligence technology together with everyone through the PRS platform.